Her 6-Year-Old Son Told Her He Wanted to Die. So She Built an AI Company to Save Him

The burgeoning world of AI-powered mental health support is a minefield. From chatbots giving dangerously incorrect medical advice to AI companions encouraging self-harm, the headlines are filled with cautionary tales.

High-profile apps like Character.AI and Replika have faced backlash for harmful and inappropriate responses, and academic studies have raised alarms.

Two recent studies from Stanford University and Cornell University found that AI chatbots often stigmatize conditions such as alcohol dependence and schizophrenia, respond “inappropriately” to certain common conditions and “encourage clients’ delusional thinking.” The researchers warned about the risk of over-reliance on AI without human oversight.

But against that backdrop, Hafeezah Muhammad, a Black woman, is building something different. And she’s doing it for reasons that are painfully personal.

“In October of 2020, my son, who was six, came to me and told me that he wanted to kill himself,” she recounts, her voice still carrying the weight of that moment. “My heart broke. I didn’t see it coming.”

At the time, she was an executive at a national mental health company, someone who knew the system inside and out. Yet, she still couldn’t get her son, who has a disability and is on Medicaid, into care.

“Only 30% or less of providers even accept Medicaid,” she explains. “More than 50% of kids in the U.S. now come from multicultural households, and there weren’t solutions for us.”

She says she was terrified, embarrassed and worried about the stigma of a child struggling. So she built the thing she couldn’t find.

Today, Muhammad is the founder and CEO of Backpack Healthcare, a Maryland-based provider that has served more than 4,000 pediatric patients, most of them on Medicaid. It’s a company staking its future on the radical idea that technology can support mental health without replacing the human touch.

Practical AI, Not a Replacement Therapist

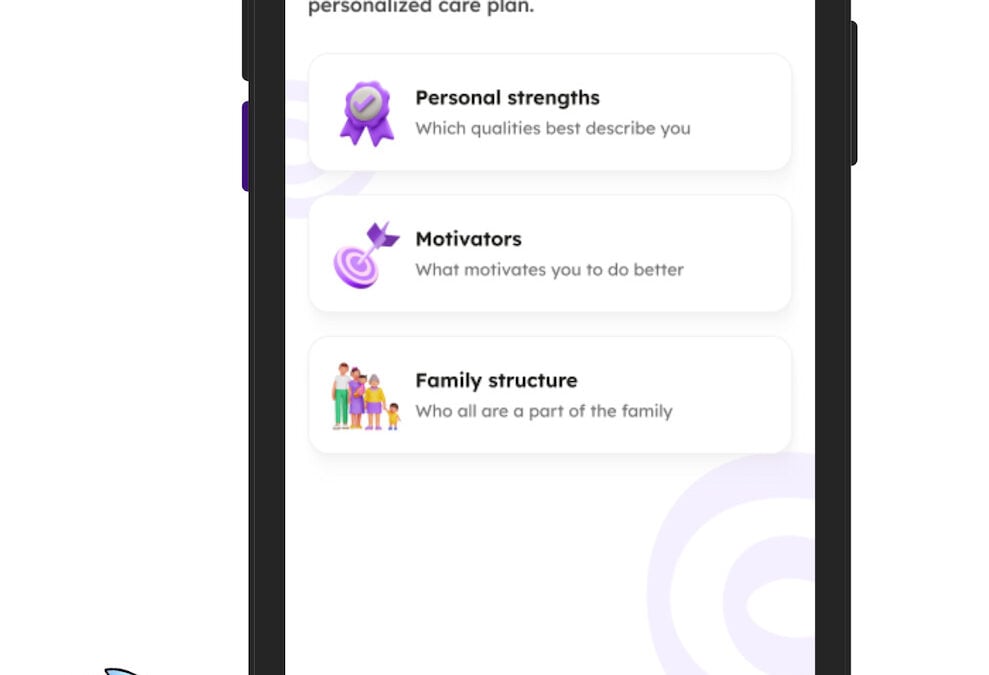

On paper, Backpack sounds like many other telehealth startups. In reality, its approach to AI is deliberately pragmatic, focusing on “boring” but impactful applications that empower human therapists.

An algorithm pairs kids with the best possible therapist on the first try (91% of patients stick with their first match). AI also drafts treatment plans and session notes, giving clinicians back hours they used to lose to paperwork.

“Our providers were spending more than 20 hours a week on administrative tasks,” Muhammad explains. “But they are the editors.”

This human-in-the-loop approach is central to Backpack’s philosophy.

The most critical differentiator for Backpack lies in its robust ethical guardrails. Its 24/7 AI care companion is represented by “Zipp,” a friendly cartoon character. It’s a deliberate choice to avoid the dangerous “illusion of empathy” seen in other chatbots.

“We wanted to make it clear this is a tool, not a human,” Muhammad says.

Investor Nans Rivat of Pace Healthcare Capital calls this the trap of “LLM empathy,” where users “forget that you’re talking to a tool at the end of the day.” He points to cases like Character.AI, where a lack of these guardrails led to “tragic” outcomes.

Muhammad is also adamant about data privacy. She explains that individual patient data is never shared without explicit, signed consent. However, the company does use aggregated, anonymized data to report on trends, like how quickly a group of patients was scheduled for care, to its partners.

More importantly, Backpack uses its internal data to improve clinical outcomes. By tracking metrics like anxiety or depression levels, the system can flag a patient who might need a higher level of care, ensuring the technology serves to get kids better, faster.

Crucially, Backpack’s system also includes an immediate crisis detection protocol. If a child types a phrase indicating suicidal ideation, the chatbot instantly replies with crisis hotline numbers and instructions to call 911. Simultaneously, an “immediate distress message” is sent to Backpack’s human crisis response team, who reach out directly to the family.

“We’re not trying to replace a therapist,” Rivat says. “We’re adding a tool that didn’t exist before, with safety built in.”

Building the Human Backbone

Beyond its ethical tech, Backpack is also tackling the national therapist shortage. Unlike doctors, therapists have traditionally had to pay out of pocket, in many cases, for the expensive supervision hours required to get licensed.

To combat this, Backpack launched its own two-year, paid residency program that covers those costs, creating a pipeline of dedicated, well-trained therapists. More than 500 people apply each year, and the program boasts an impressive 75% retention rate.

In 2021, then-U.S. Surgeon General Dr. Vivek H. Murthy called mental health “the defining public health issue of our time,” referring to the mental health crisis plaguing young people.

Muhammad doesn’t dodge the criticism that AI could make things worse.

“Either someone else will build this tech without the right guardrails, or I can, as a mom, make sure it’s done right,” she says.

Her son is now 11, thriving, and serves as Backpack’s “Chief Child Innovator.”

“If we do our job right, they don’t need us forever,” Muhammad says. “We give them the tools now, so they grow into resilient adults. It’s like teaching them to ride a bike. You learn it once, and it becomes part of who you are.”
